Jeff Paul / Product Designer & Researcher

Building native solutions for writing AI prompts and actions

AI by Zapier in the Editor

Role

Senior Product Designer. Responsible for designing the feature end-to-end. Worked closely with product and engineering counterparts on shaping and delivery.

Team

v1: Initial tiger team of Director of Product, Lead UX Researcher, and 2 Staff Engineers.

v2: Product Manager and Engineering Manager (core EPD), and 5 Front-end/Full-stack Engineers.

Timeline

2024–25. v1 was released in Q3 2024 as the initial MVP, and v2 was released in Q2 2025, with many design iterations and improvements throughout.

Context

AI adoption was growing rapidly, yet we continued to see untapped potential

AI adoption was gaining significant momentum, particularly among SaaS products and workflow automation tools. However, we noted that only 1% of all Zaps on the platform had incorporated an AI app in their workflow. More users wanted to use AI but were unsure how to get started with this new technology.

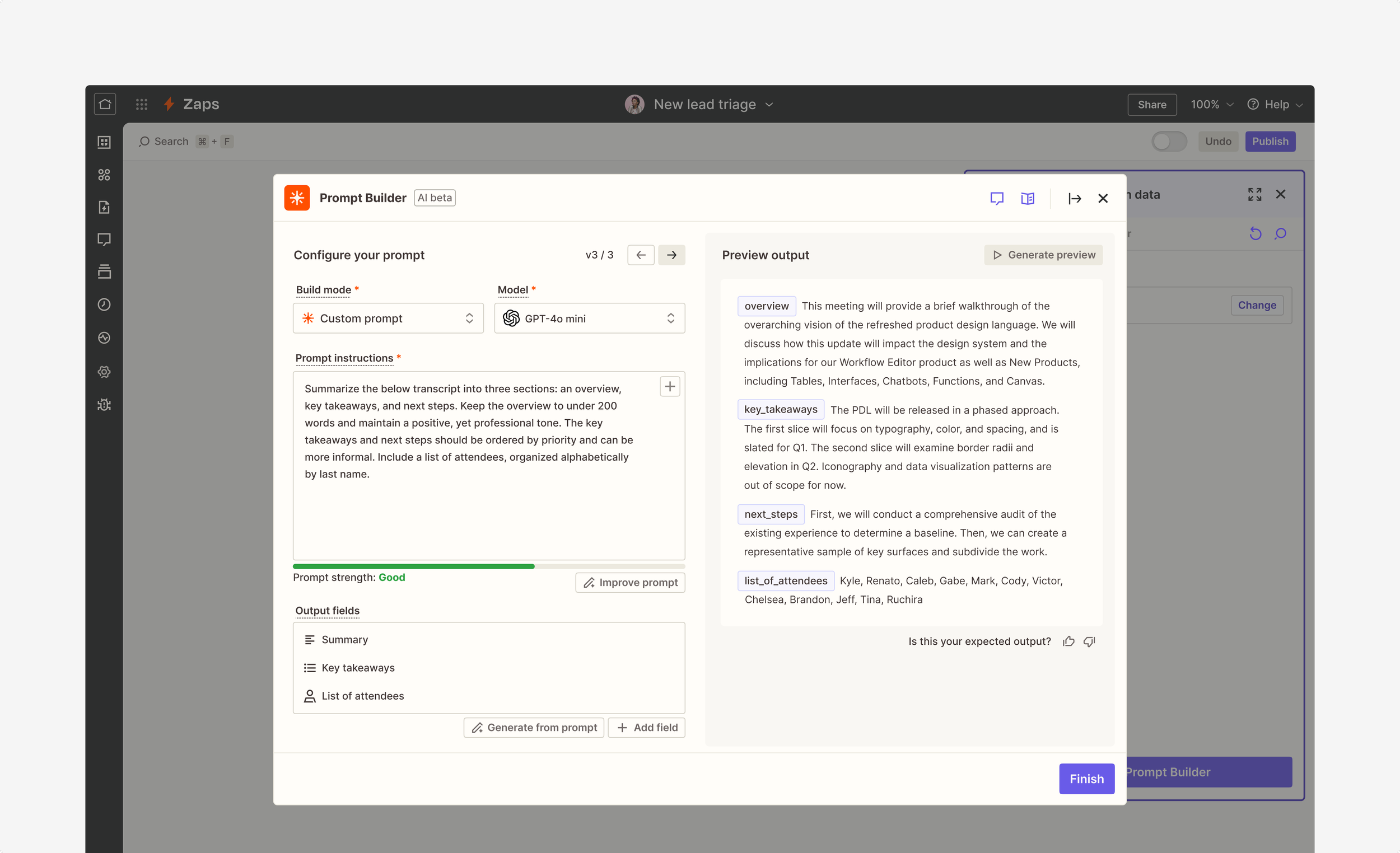

Examples of AI steps available from top AI apps on Zapier, including OpenAI (ChatGPT), Anthropic (Claude), and Google (Gemini)

Problem

We wanted to enable users to better harness the power of AI while minimizing any barriers

Digging deeper, we recognized two main problem areas: discoverability and usability. User research revealed that customers often struggled to understand how they might use AI in their workflows or to find the right use case. They also described confusion around selecting the right provider and model for the job, the technical complexity of connecting their own API key to their account, and the difficulty of writing a prompt robust and detailed enough to yield the output they wanted.

I wondered how I might design a tool that obscures the complexity of prompt engineering while introducing basic concepts like inputs and outputs. How might I inspire (rather than intimidate) users with the prospect of using AI? How might I reduce unnecessary decision-making for users to get started?

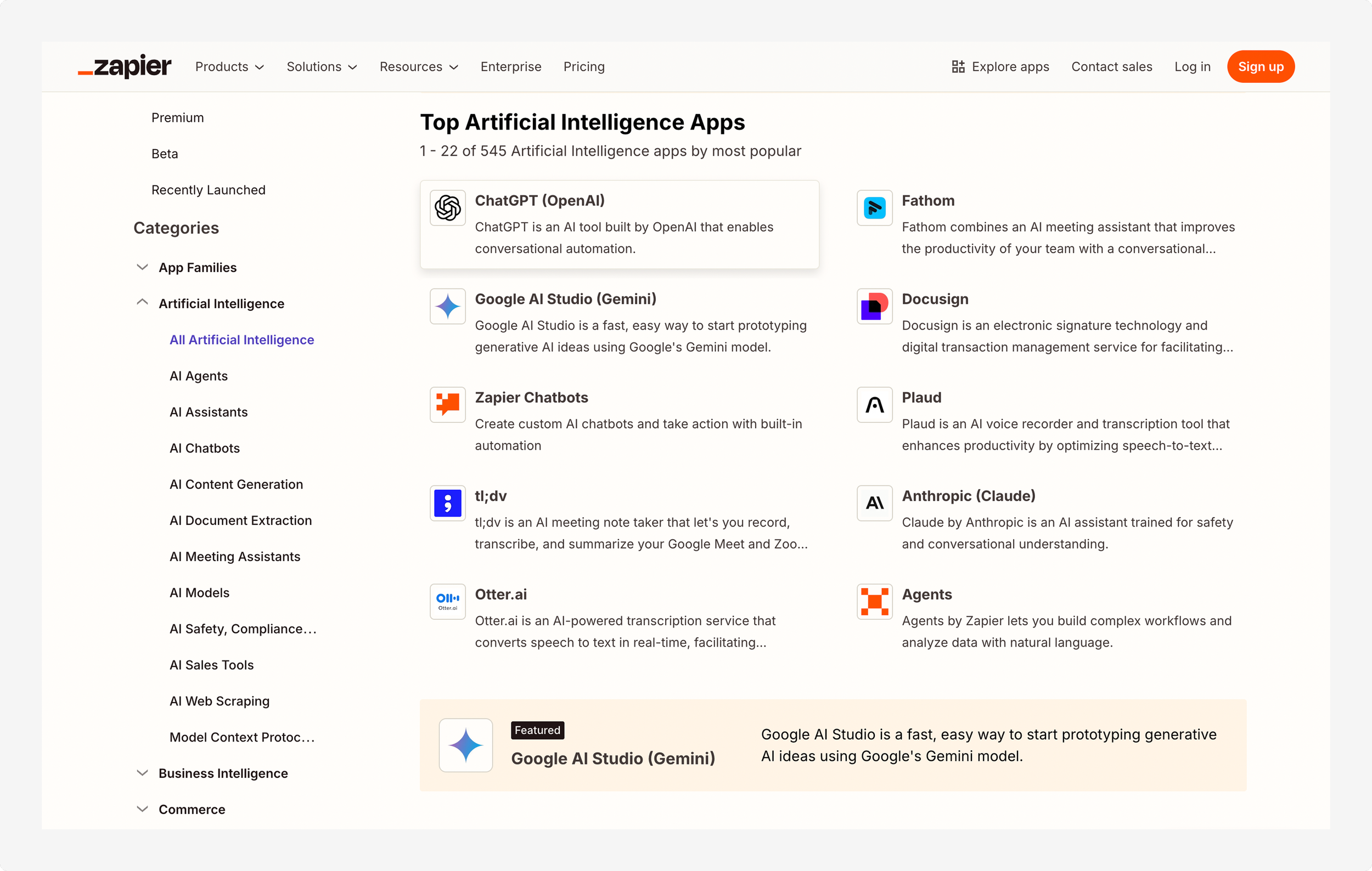

A snapshot of available AI apps on Zapier from an external marketing page geared toward discovery

Approach

Scoping down allowed us to ship quickly and validate a design direction

I did a brief audit of other AI products and tools as part of the project kickoff. I was particularly interested in understanding which UI patterns and standards were emerging for how users could best interact with AI.

A sample of AI products with unique interfaces for chat and image generation

As I began design exploration, Product already had a solution in mind. They proposed a setup wizard, one that would guide users step-by-step through the process. Given the iterative way in which a user would need to write and test a prompt back and forth, and based on the quick audit I did earlier, I argued a setup wizard would not be the best approach. After further exploration, I suggested we adopt a similar pattern to the recently built Focus Mode, which would give users more space to build iteratively.

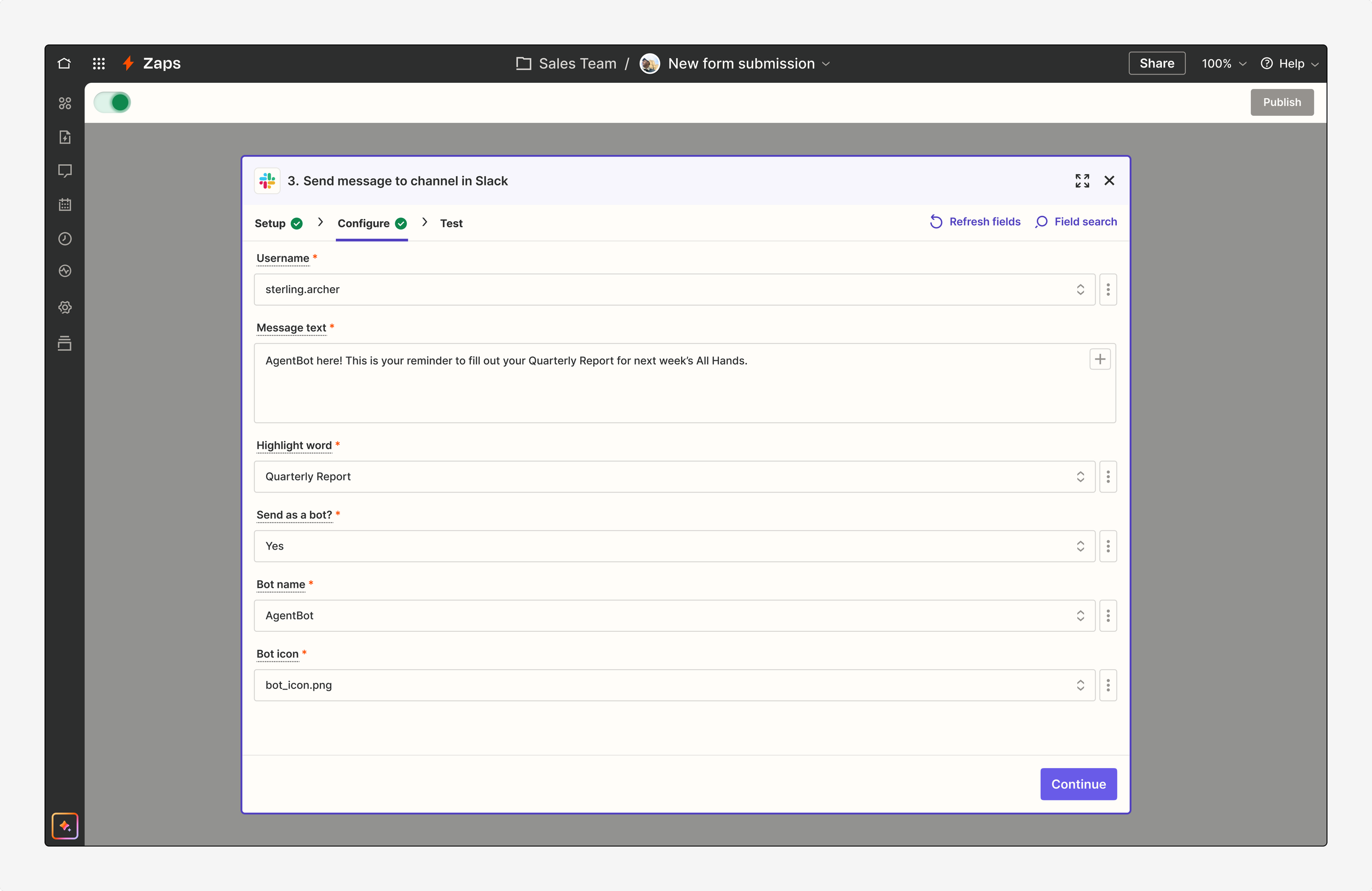

An example of Focus Mode for a Slack action step in the Editor

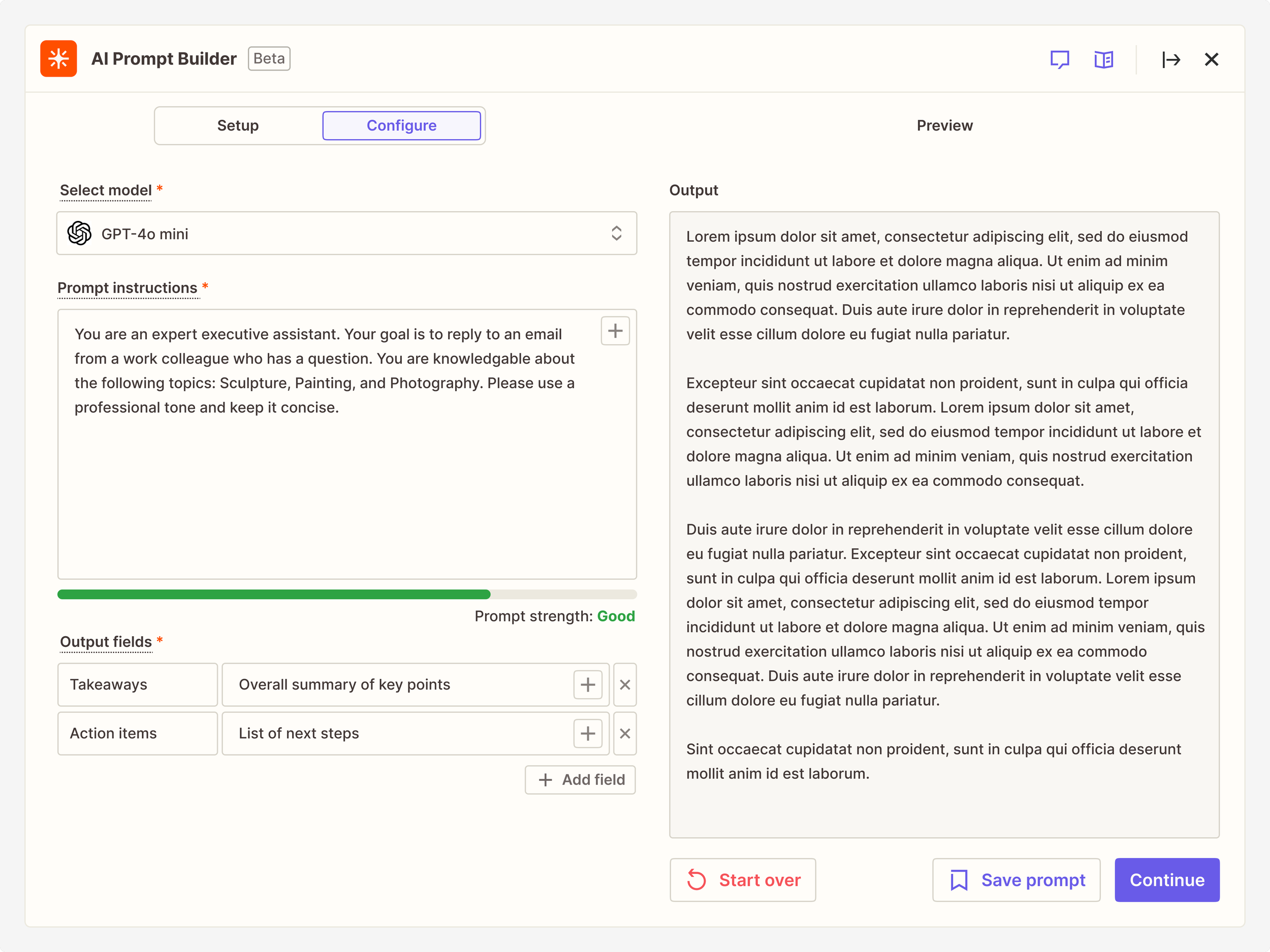

Accordingly, v1 introduced a split-column view, with a prompt assistant on the left and a prompt editor on the right. The prompt assistant allowed users to select from a series of templates to get started, or simply describe what they wanted to happen. If they chose the latter, a “Generate prompt” button expanded their request into a format better suited to the LLM. I also included an option for output fields, which enabled users to define structured data for the LLM to return.

To address our usability concerns, v1 was powered by OpenAI’s GPT-4o mini model by default, free of charge. This eliminated the need for users to authenticate with separate credentials: everything they needed was already included.

The MVP of AI by Zapier, which adopted the same dimensions and behavior as Focus Mode in the Editor

We were on a tight timeline, eager to release an initial MVP of AI by Zapier before ZapConnect, our annual company-wide conference. We pulled together a tiger team, and the result was a new built-in tool within the workflow Editor.

In the weeks after launch, we saw rapid adoption. This v1 of AI by Zapier grew to account for about 25% of all ChatGPT usage on the platform. Our novel UX solution also proved successful, with a 15% higher completion rate than a standalone ChatGPT step. With such a positive signal, the leadership team showed a strong appetite for what we were building. They encouraged us to keep investing in this initiative, develop more robust functionality, and focus on a more polished experience.

Iterative releases enabled more enhancements, confirmed our investment in the space, and incorporated the latest developments

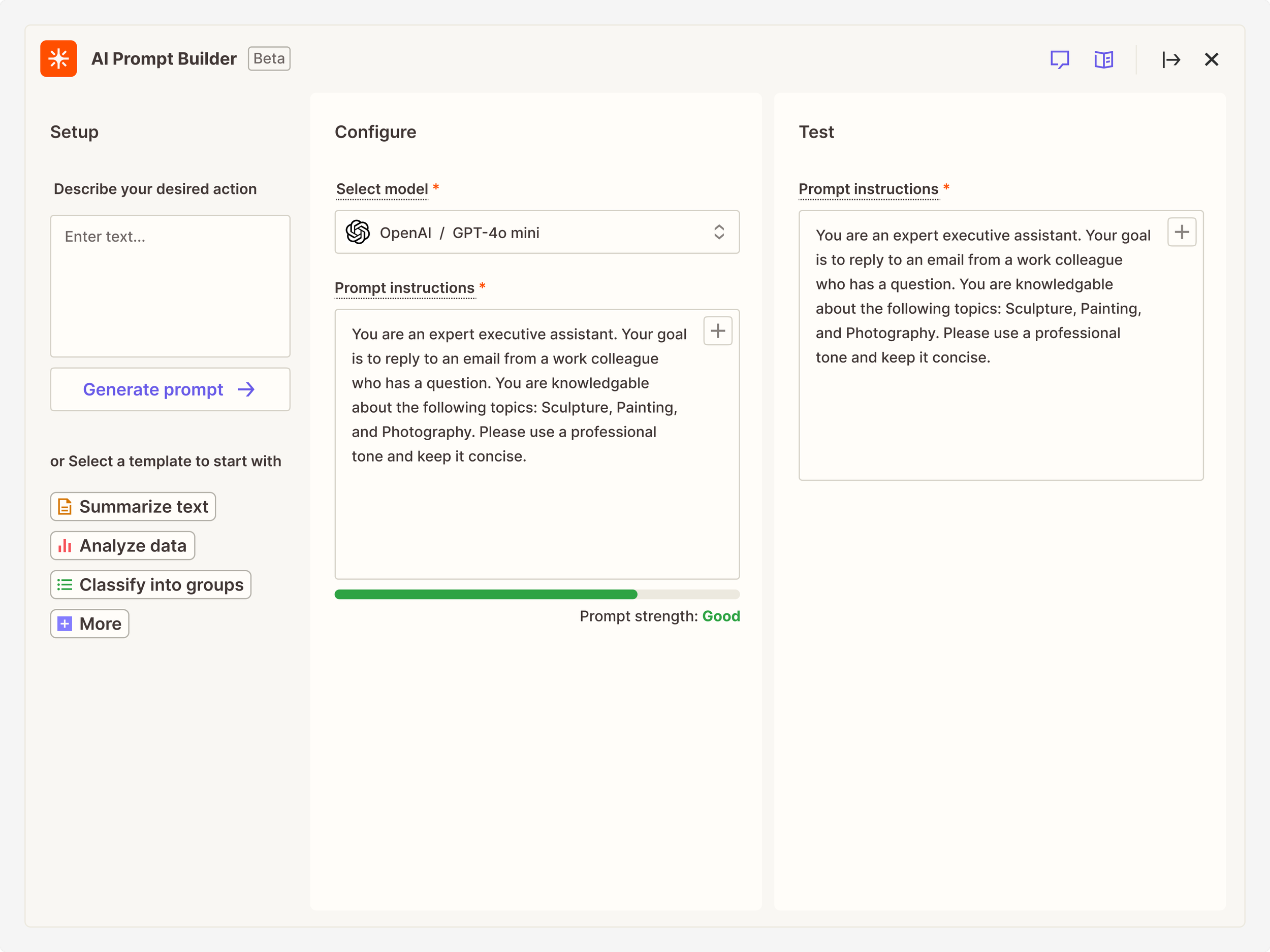

Despite the early wins of our first release, there was plenty of room for improvement. Our Lead UX Researcher conducted usability testing with 10 participants to better understand what we should tackle next. We learned that the AI-generated prompt was widely loved and that the templates, though useful, served more as a starting point for inspiration than as a complete solution. We also found that users were sometimes confused about how the sections related to each other and about the back-and-forth they implied. Input fields, displayed as mapped pills within the prompt, were often unclear to users. I wondered: how might I redesign our standard input and output field patterns to be more intuitive?

Another theme emerged: control. Users wanted the ability to select a model rather than rely on a default of GPT-4o mini (which was hidden in v1). This extended beyond OpenAI; users wanted options from other providers as well. I was curious what other features I could build into this tool to give users greater control over the AI results in their workflows.

A FigJam board of usability findings and recommendations from our Lead UX Researcher

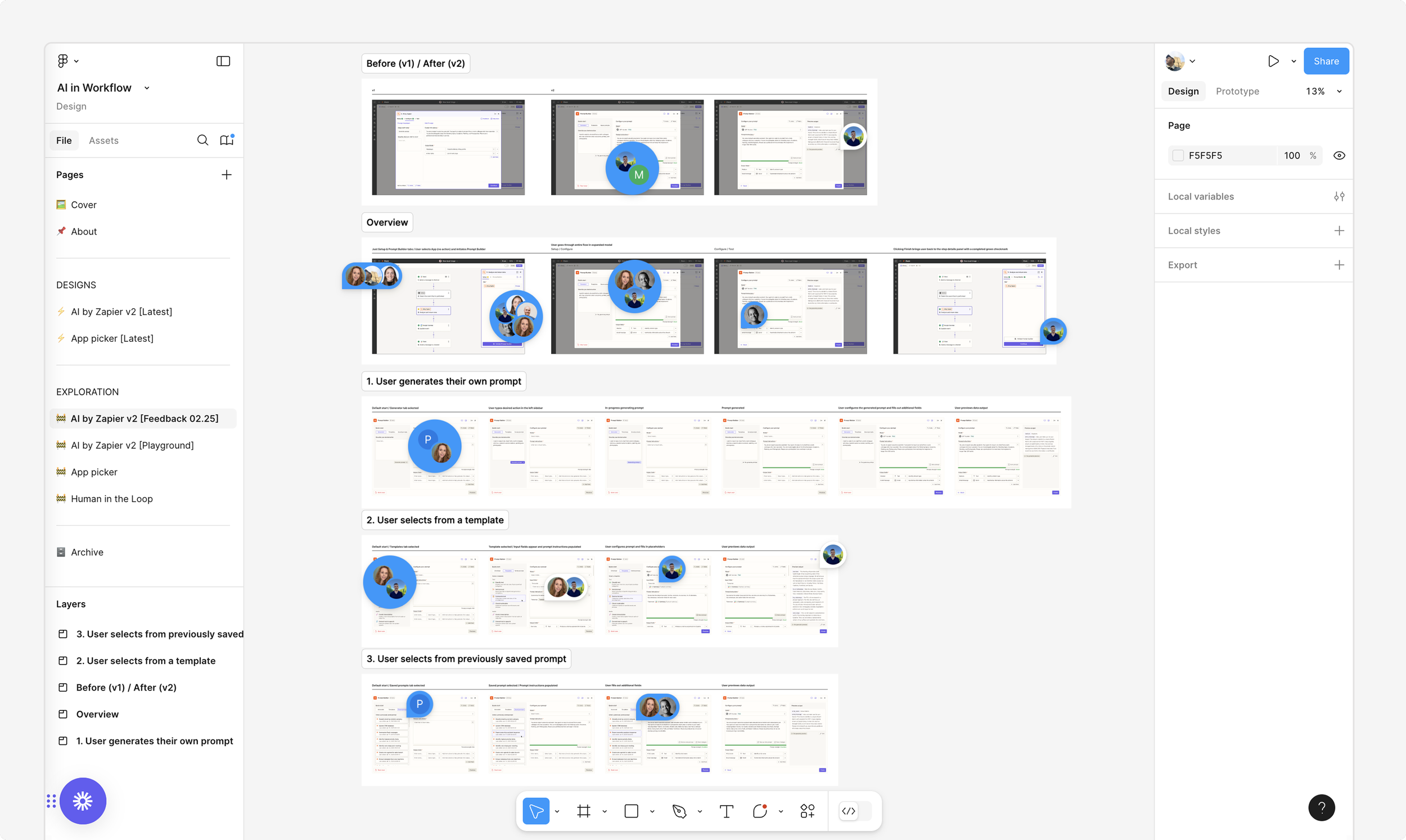

With plenty of insights, I revisited my original designs. I wanted to keep the split-column view, but needed to better convey the sequential (and sometimes circuitous) nature of crafting AI prompts. I explored numerous configurations: a tabbed approach separating the general assistant from templates, a three-column layout, a single input, and various ways of showing the setup and configure substeps side-by-side.

Option 1: Dual tabs for the assistant vs. templates

Option 2: Adding a third tab for saved prompts

Option 3: Trying a three-column arrangement

Option 4: Showing all setup options together on the left panel without tabs

After converging on a design, I presented my initial recommendation to a group of stakeholders and the leadership team for feedback. I then encouraged everyone to leave Figma comments so we could track and address their opinions.

Stakeholders across Product, Design, and Engineering weighing in on the new proposal

A major pivot moment unlocked a series of new explorations better aligned with user behaviors

Even after the share-out, I still wasn’t convinced of this direction. Then I had an epiphany: users would likely find more value in seeing their configuration details side-by-side with a preview of their output rather than on a separate screen. By uniting the setup with its configuration, I emphasized the most important aspects of completing a prompt and better supported the expected behavior of going back and forth to tweak it. Even so, many more configuration explorations followed.

Option 1: Setup and configure tabs, with Preview on the left side

Option 2: Collapsing setup and configure into a single view

Option 3: Giving more prominence to configuration

Option 4: Reconsidering how to visualize build mode and additional controls

Solution

The unified prompt builder seamlessly integrated into the Editor and delivered advanced AI functionality

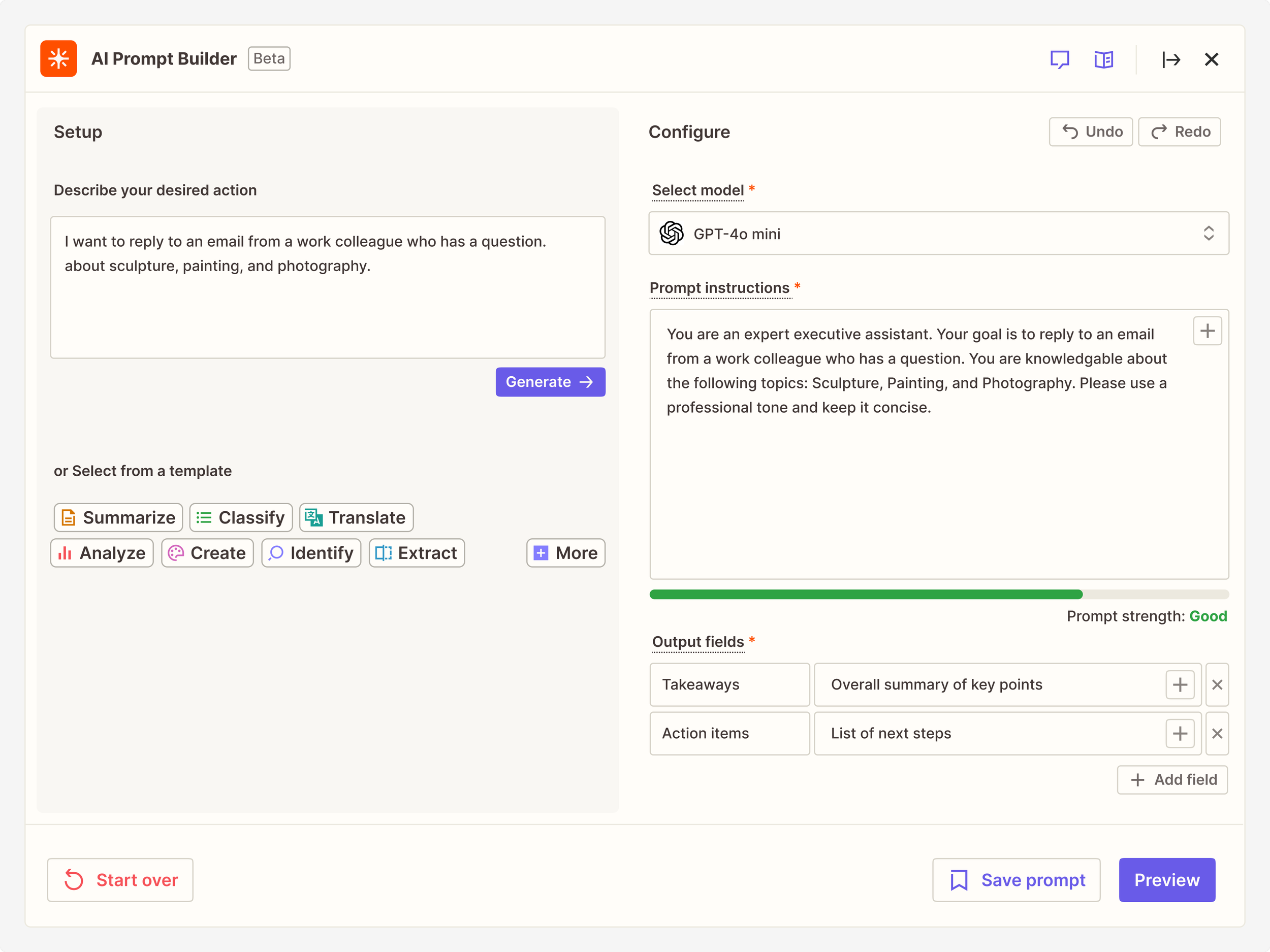

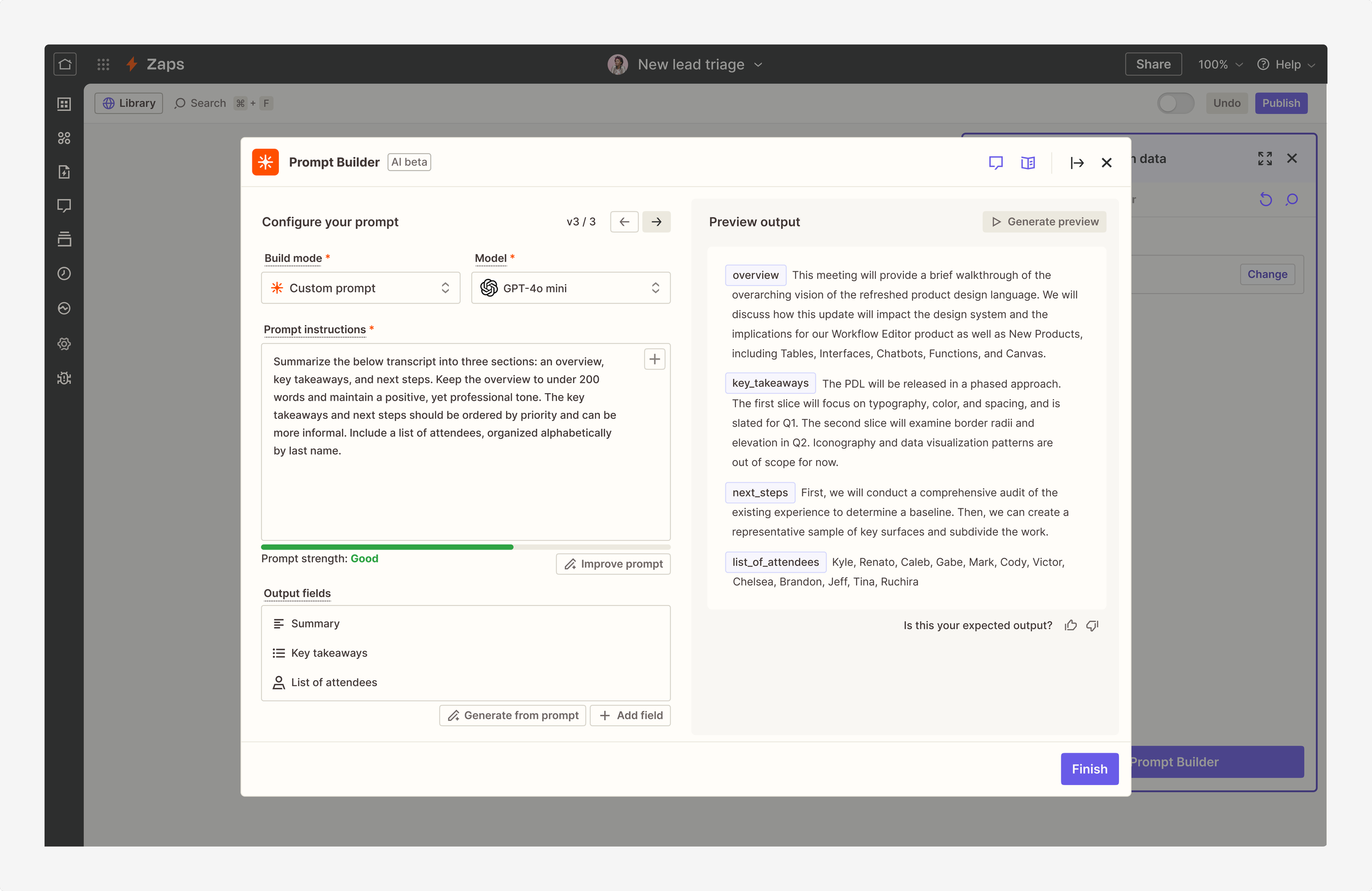

Ultimately, we launched a powerful AI prompt builder, much improved from our initial MVP and better aligned with the needs of our users. In addition to getting the structure of the interface right, I introduced a series of new features and capabilities, some in line with emerging industry standards and others gleaned from speaking with users.

The final configuration of the improved AI by Zapier interface

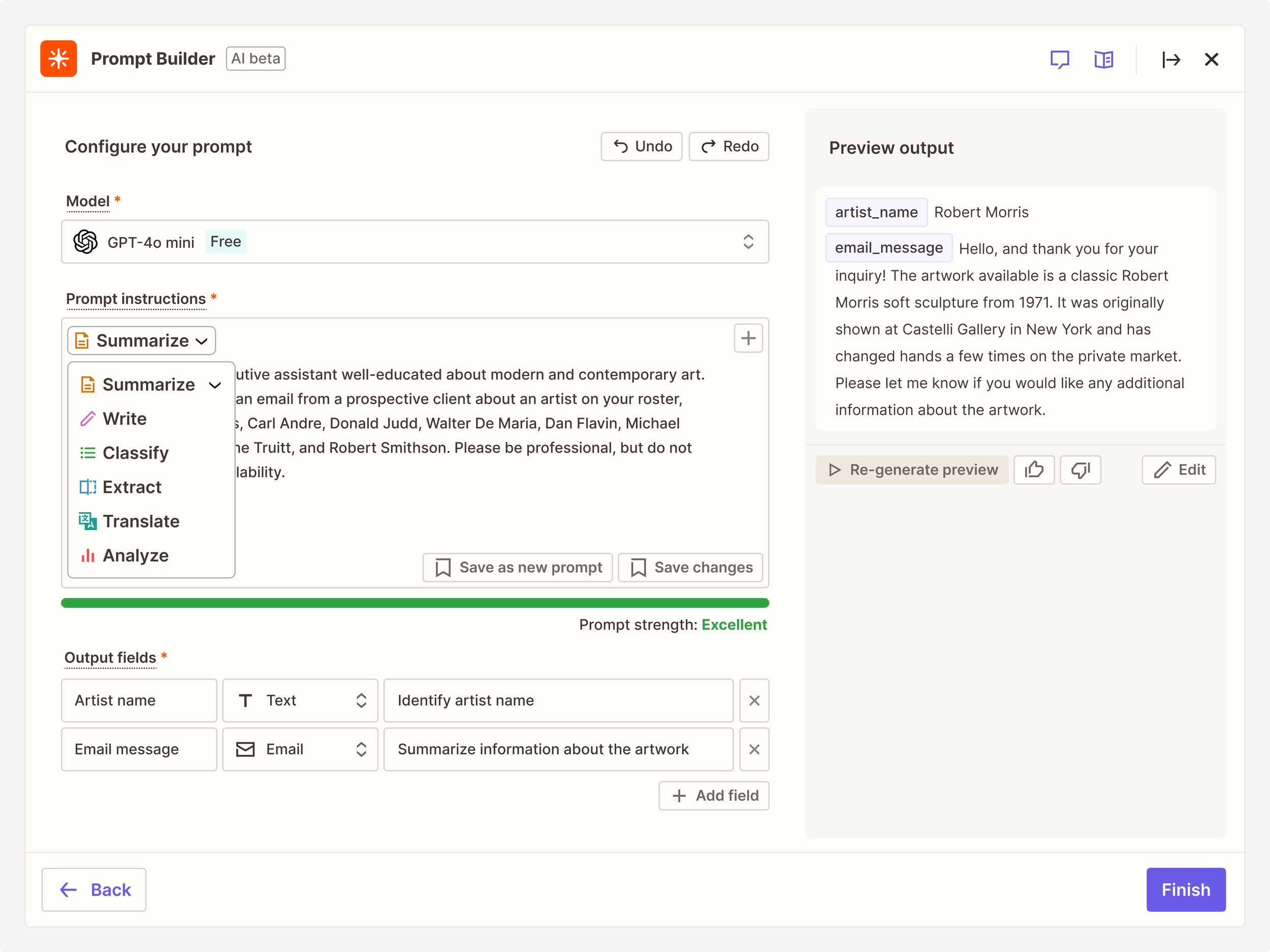

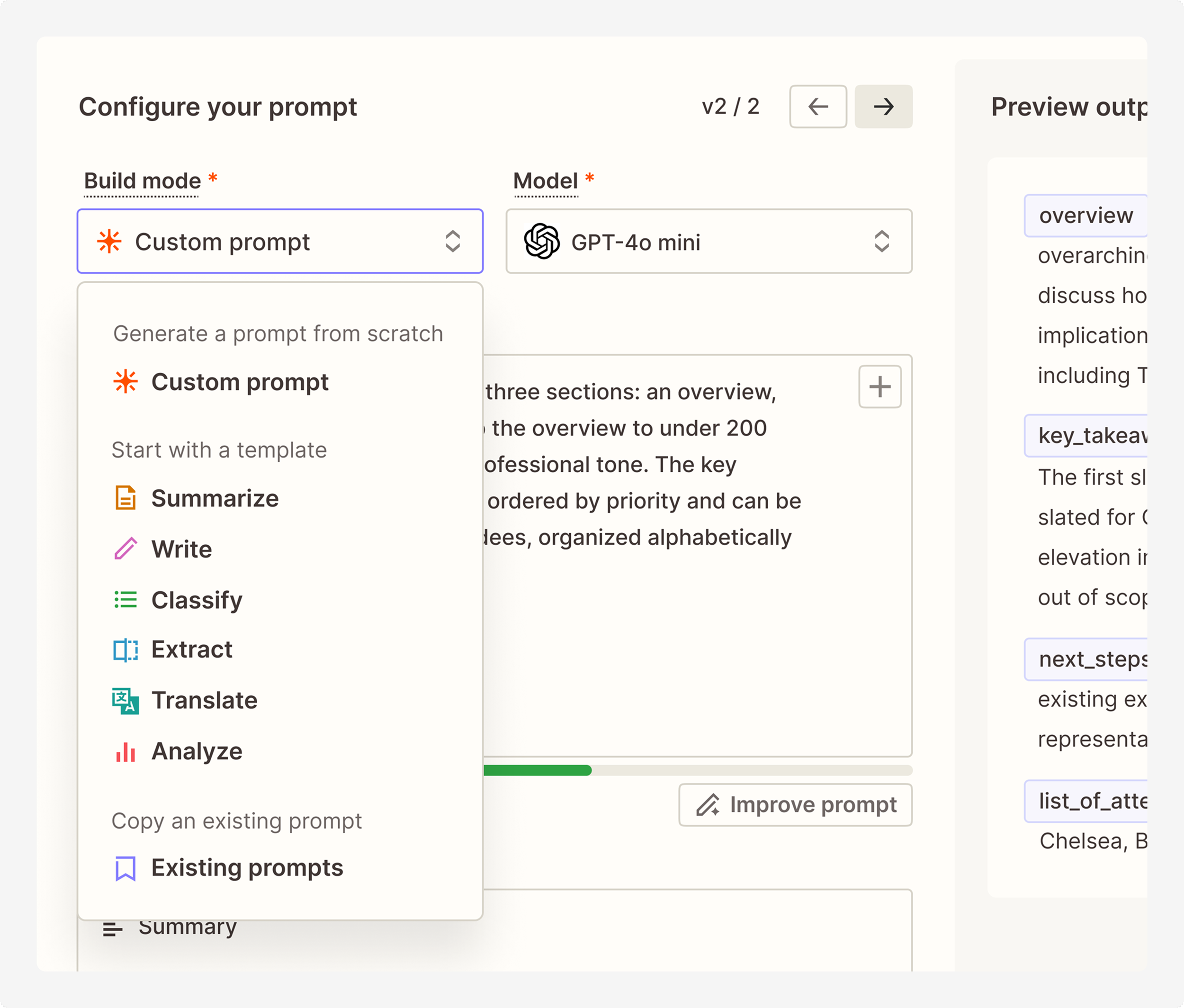

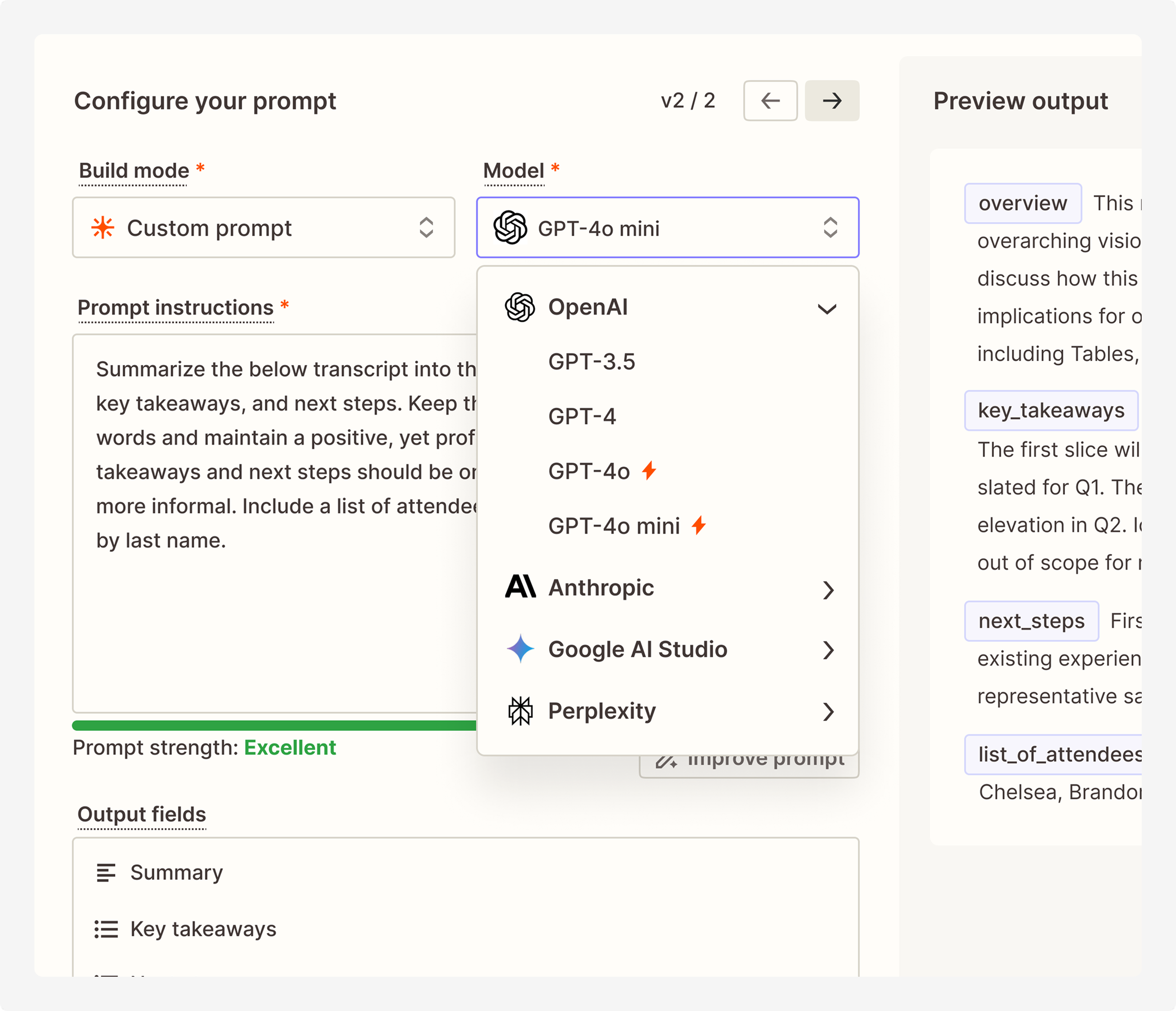

Simplifying build mode and enabling provider and model selection

Build mode was the first step in AI by Zapier, where users chose whether to create a custom prompt or use a template. I reimagined the templates to be more action-oriented (e.g. “Classify” or “Translate”) instead of the highly specific prompts introduced originally (e.g. “Write a blog post”), and added the ability to analyze new media types, including images, audio, and video. I also designed an easy way for users to retrieve saved prompts from previous sessions for quick reuse.

In our MVP, only one provider and model were available, and they were hidden from view. Users could now select and easily change their preferred model across a variety of providers, including OpenAI, Anthropic, and Google Gemini. In this view, I indicated which models were free to use (via Zapier credentials) and which required users to bring their own API key.

Build mode dropdown with various options

Provider and model dropdown

Improving prompt generation and introducing versioning

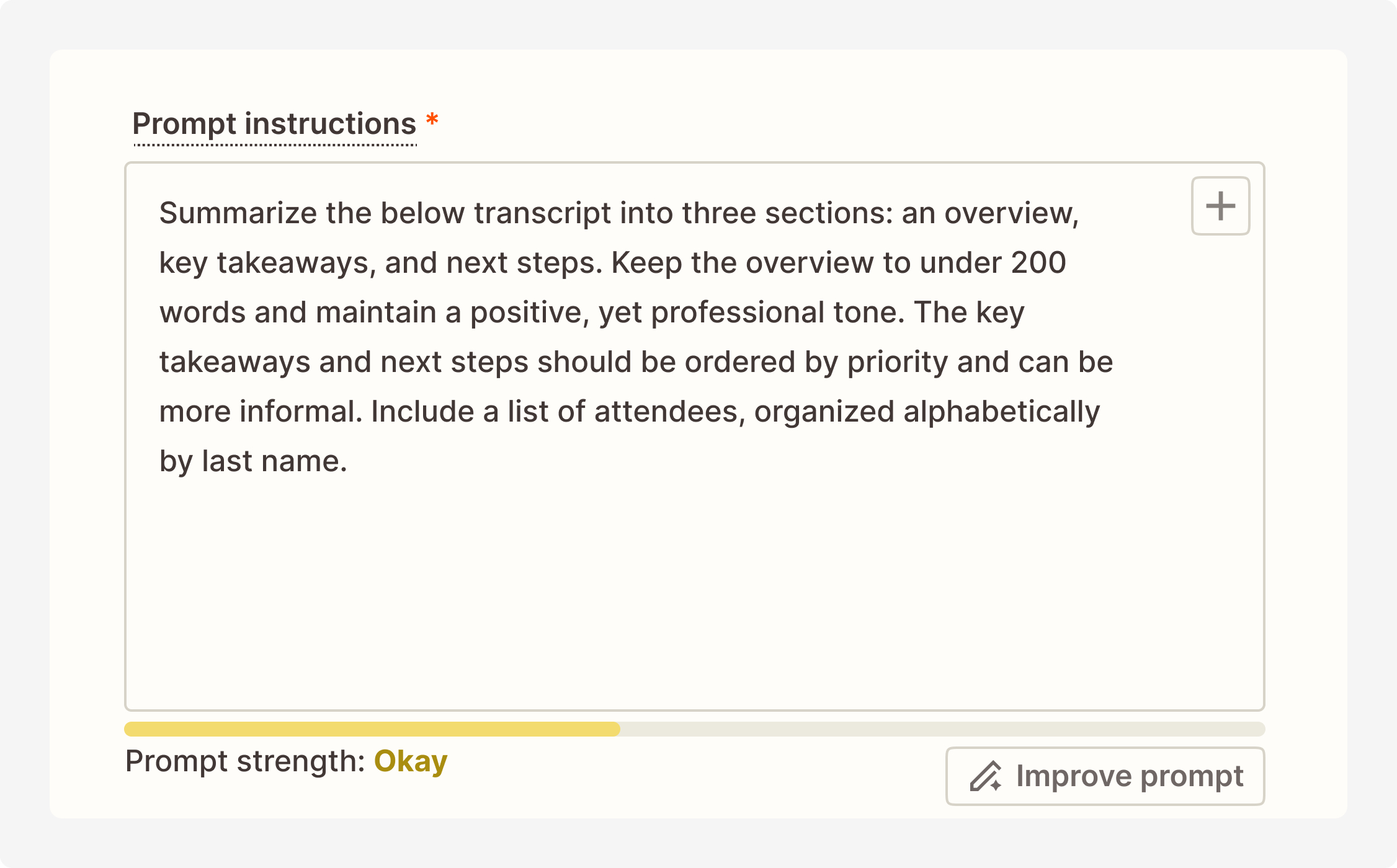

After selecting a build mode, users moved on to writing their prompt instructions, describing as best they could what they wanted the model to do in that step. Because users had told us that writing a detailed prompt was often difficult, I kept the “Improve prompt” button from our initial MVP, which continued to help users refine their written requests.

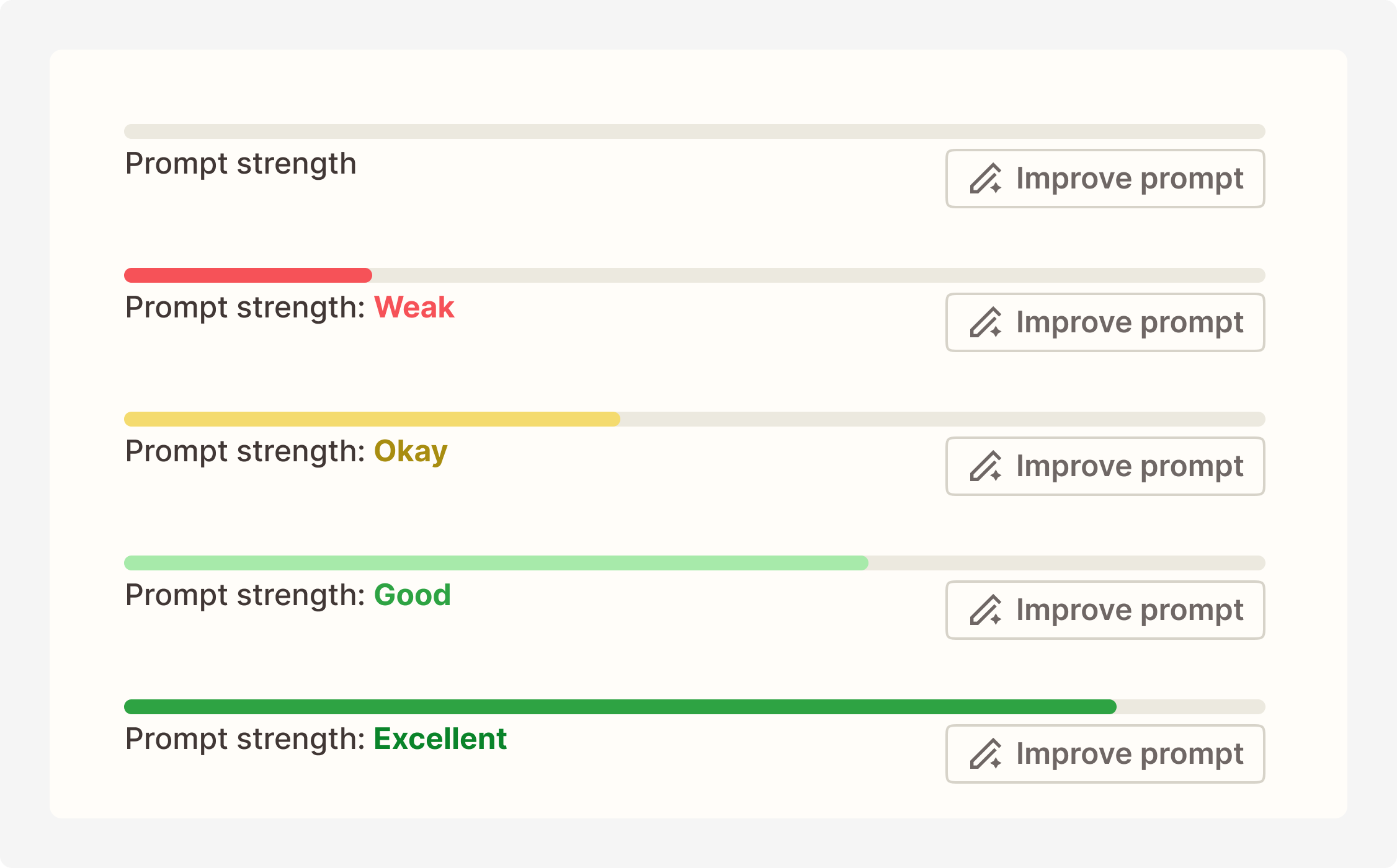

I also introduced a prompt strength component that visually indicated how much the prompt had been enhanced. Users could now see a colored bar that filled in as they fleshed out their prompt, with grades ranging from weak to excellent.

Prompt instructions field, with prompt strength and “Improve prompt” button

Various states representing the strength of the prompt instructions

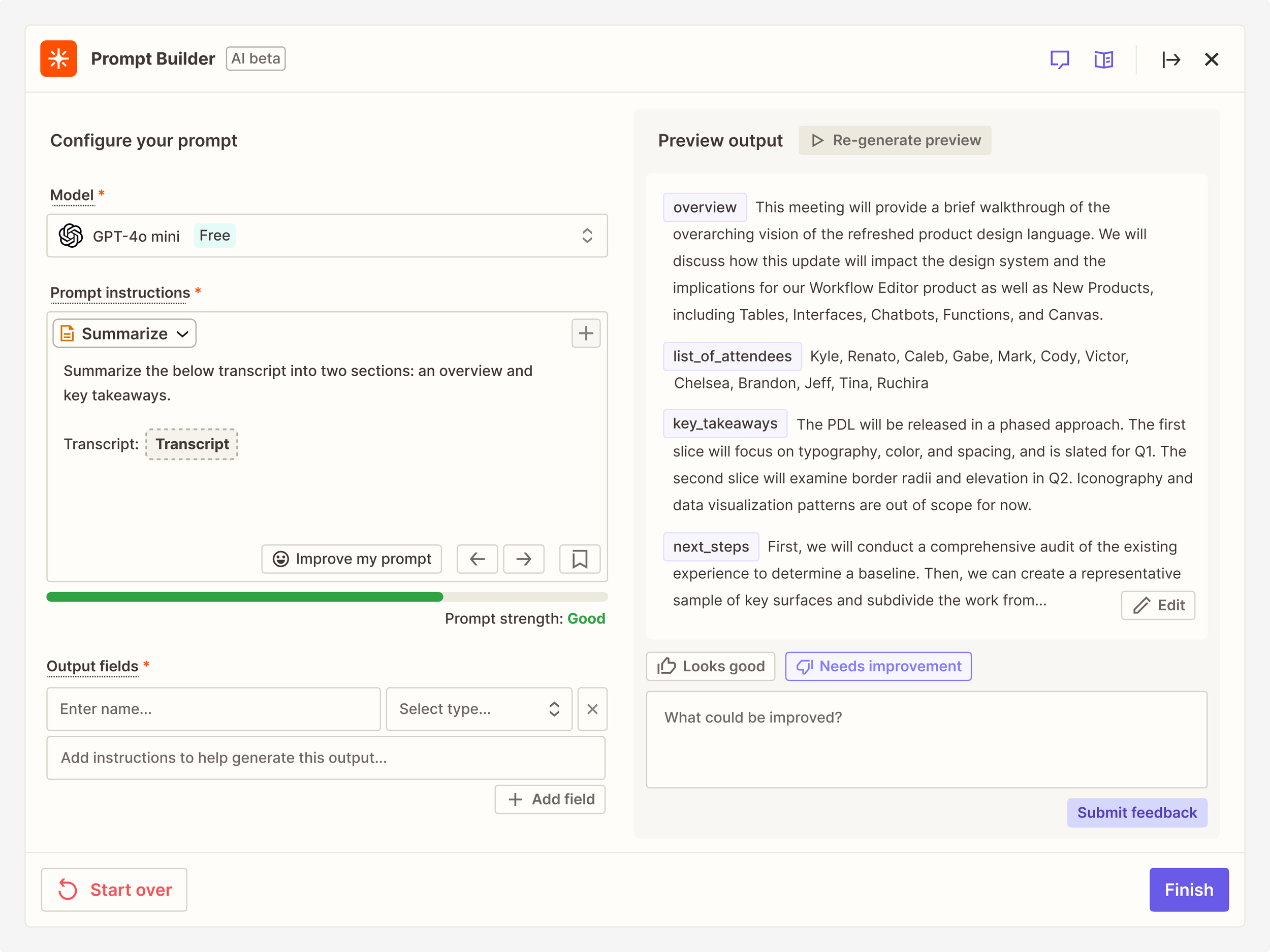

Another major improvement was versioning. Previously, users could undo (and redo) only a single action. This proved problematic when users wanted to reference a prompt from earlier in their session. With versions, users could now move between an unlimited number of variations by clicking the forward or back arrows. Versions were generated automatically every time AI assistance was used or an output was previewed, so users did not have to save versions manually.

Snapshot of versioning with its various states

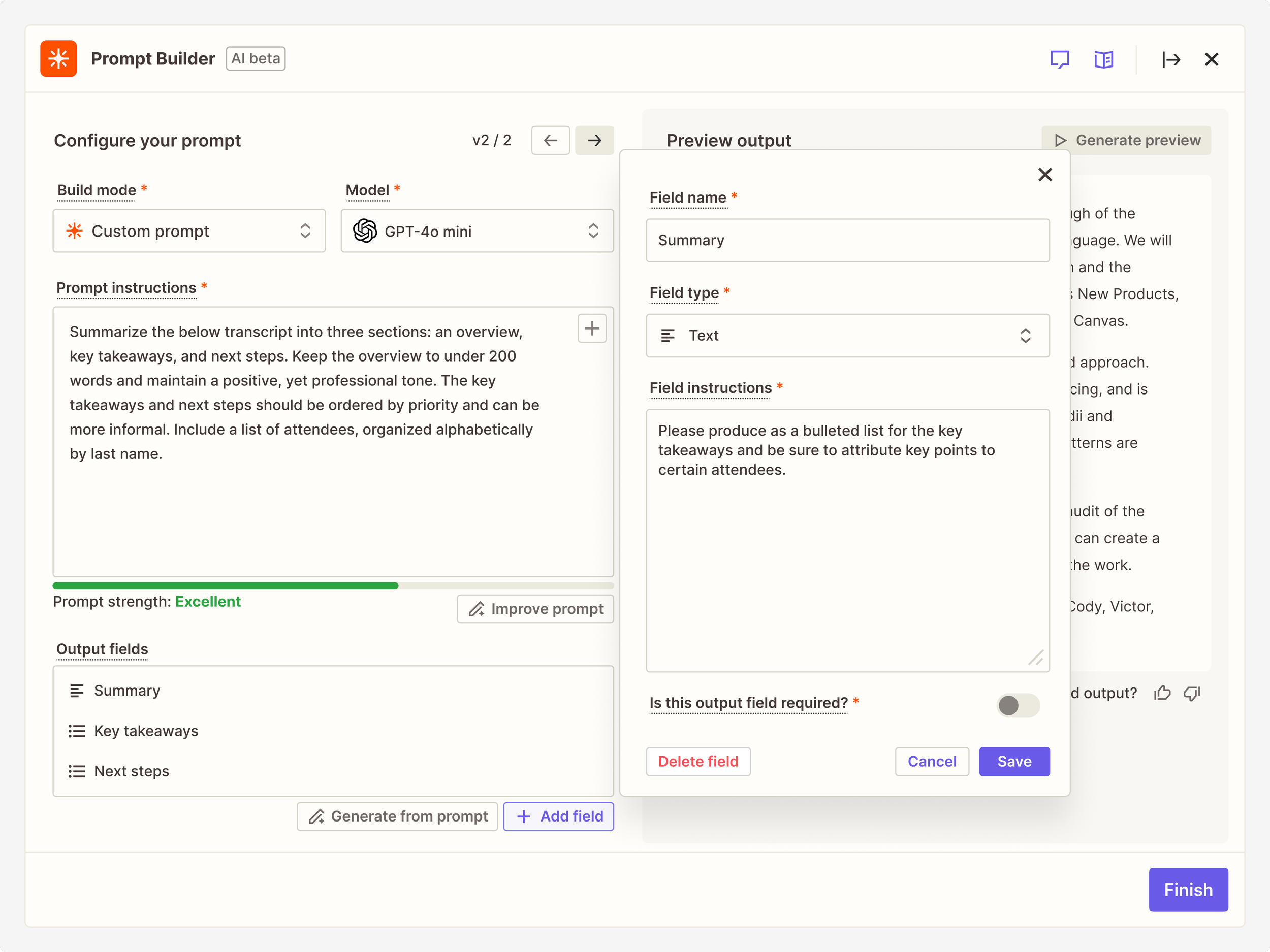

Rethinking outputs, from field configuration to previews

Outputs, or what’s produced from the prompt instructions, are crucial to any AI step, so I redesigned the output fields to be more intuitive to create. Clicking “Add field” opened a menu where users could easily specify a field name and instructions for that specific field. I also included a new section for field type (e.g. number, time, or date), which gave users more control over how their outputs were structured. Finally, I added management controls so users could edit, refresh, delete, or rearrange output fields.

Given how popular the “Improve prompt” button had become in the instructions section, I also added a new generative feature called “Generate from prompt,” which created a set of output fields based on the prompt instructions above.

Menu overlay for adding a new output field

Managing output fields in various states

Closeup of menu overlay with the output field type dropdown selected

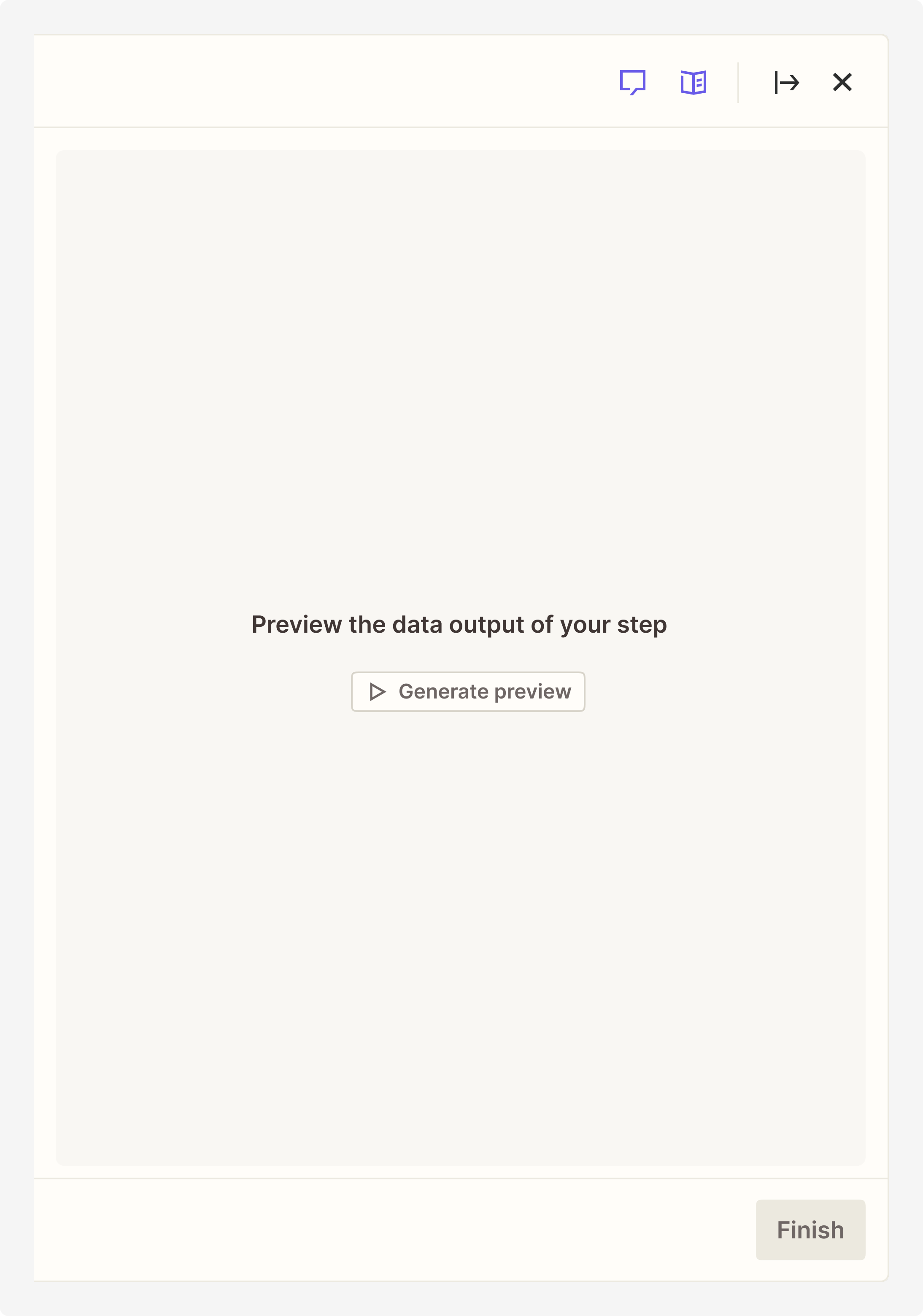

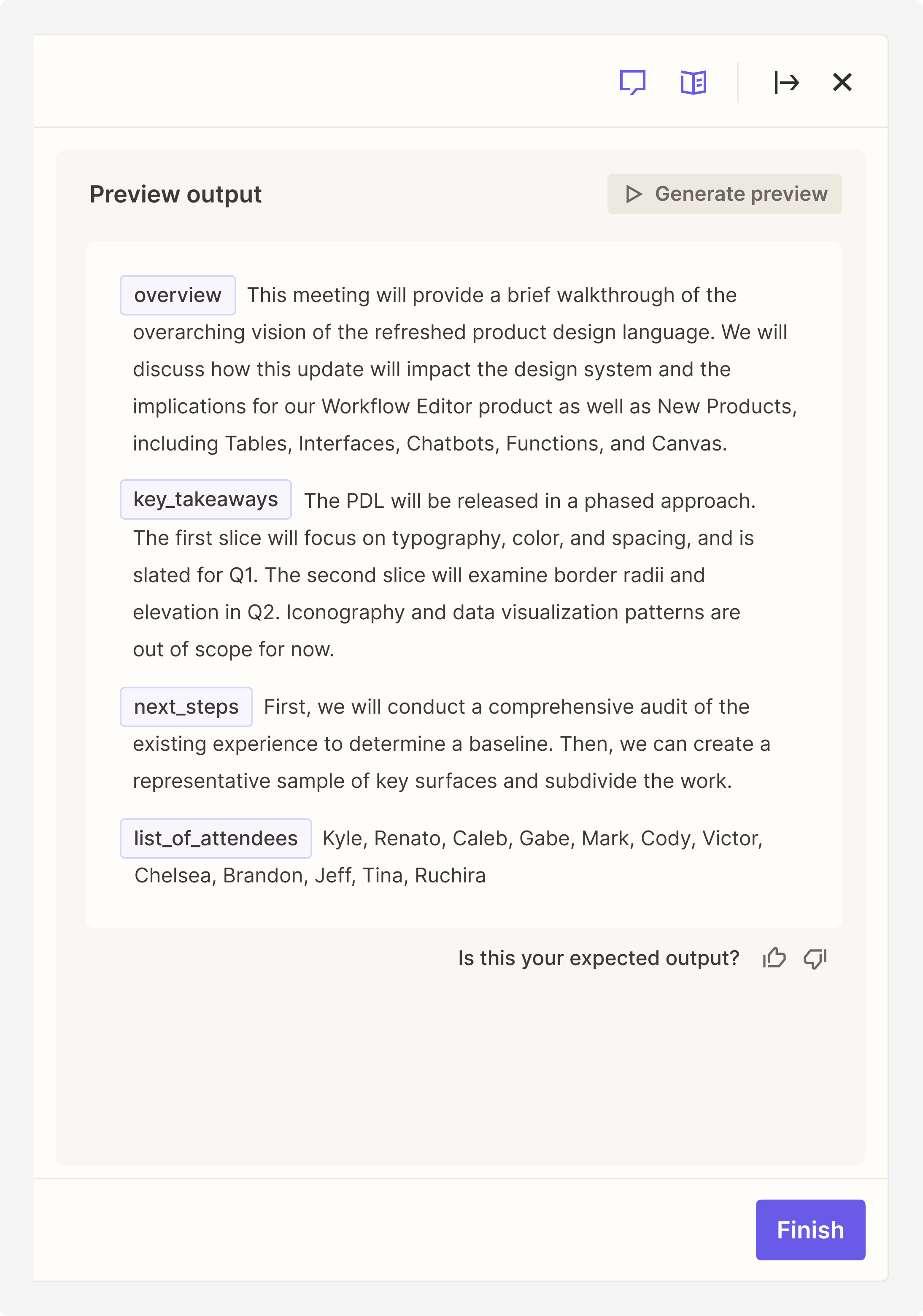

These output fields formed the basis for what appeared on the right side of the interface. When a user clicked the “Generate preview” button, the model produced a sample output based on the prompt instructions and the output fields. The output field names and types directly determined how the data was produced and displayed.

Detail of default preview panel, before preview is generated

Detail of preview panel after preview is generated

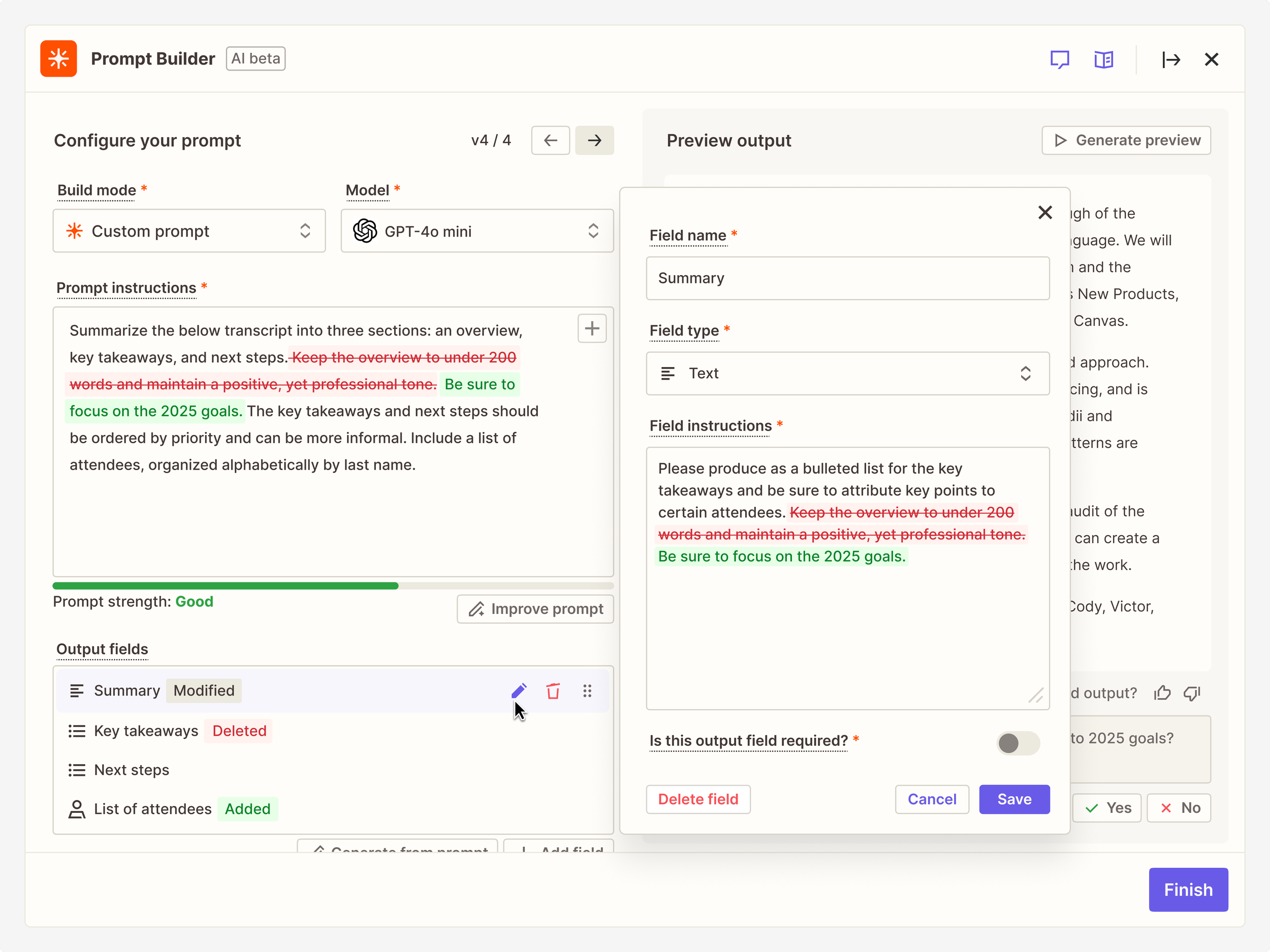

Enabling diff views to further augment prompts via chat

Once a preview was generated, users were likely to want to make further tweaks. I introduced a feedback chat mechanism that encouraged users to give the preview a thumbs up or down based on whether it matched their expectations, and then type what they wanted changed.

Doing so marked up the prompt instructions and output fields with a diff view, showing suggested removals in red alongside proposed additions in green. Users could then accept or reject these changes.

Diff views of suggested changes across prompt instructions and output fields

Impact

Significant improvements in AI adoption and business-critical measures of success

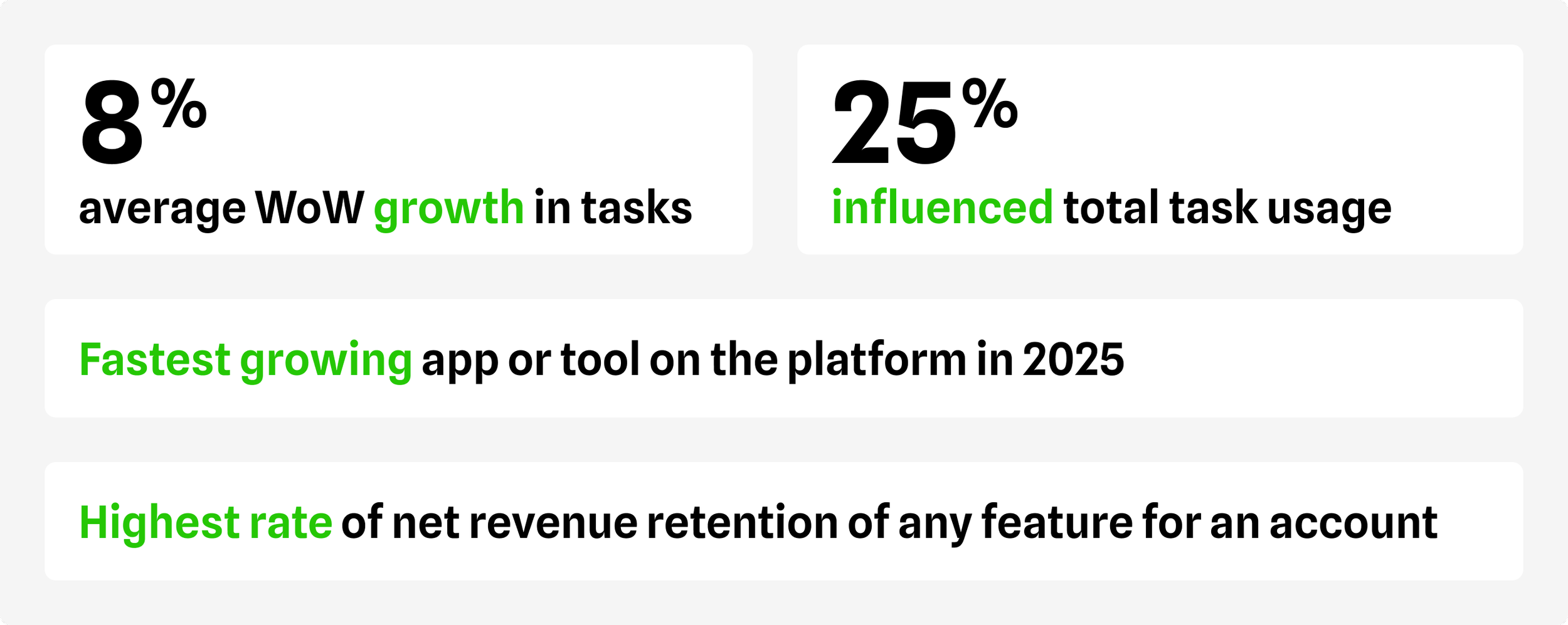

As a result of our efforts, AI by Zapier gained significant adoption, averaging 4% week-over-week (WoW) growth in unique Zaps and 8% WoW growth in tasks. It became the fastest-growing app or tool on the platform in 2025, entering the top 50 (of 8,000+ apps) for both monthly active users and recurring revenue.

Use of AI by Zapier also corresponded to the highest account-level net revenue retention rate of any feature, and it influenced about 25% of total task usage on the platform.

Next steps

Small iterations along with major complementary features, including knowledge sources and human-in-the-loop

Despite our success, there was still room for improvement. I worked with my Product Manager to plan a number of fast-follows we could ship after our initial release.

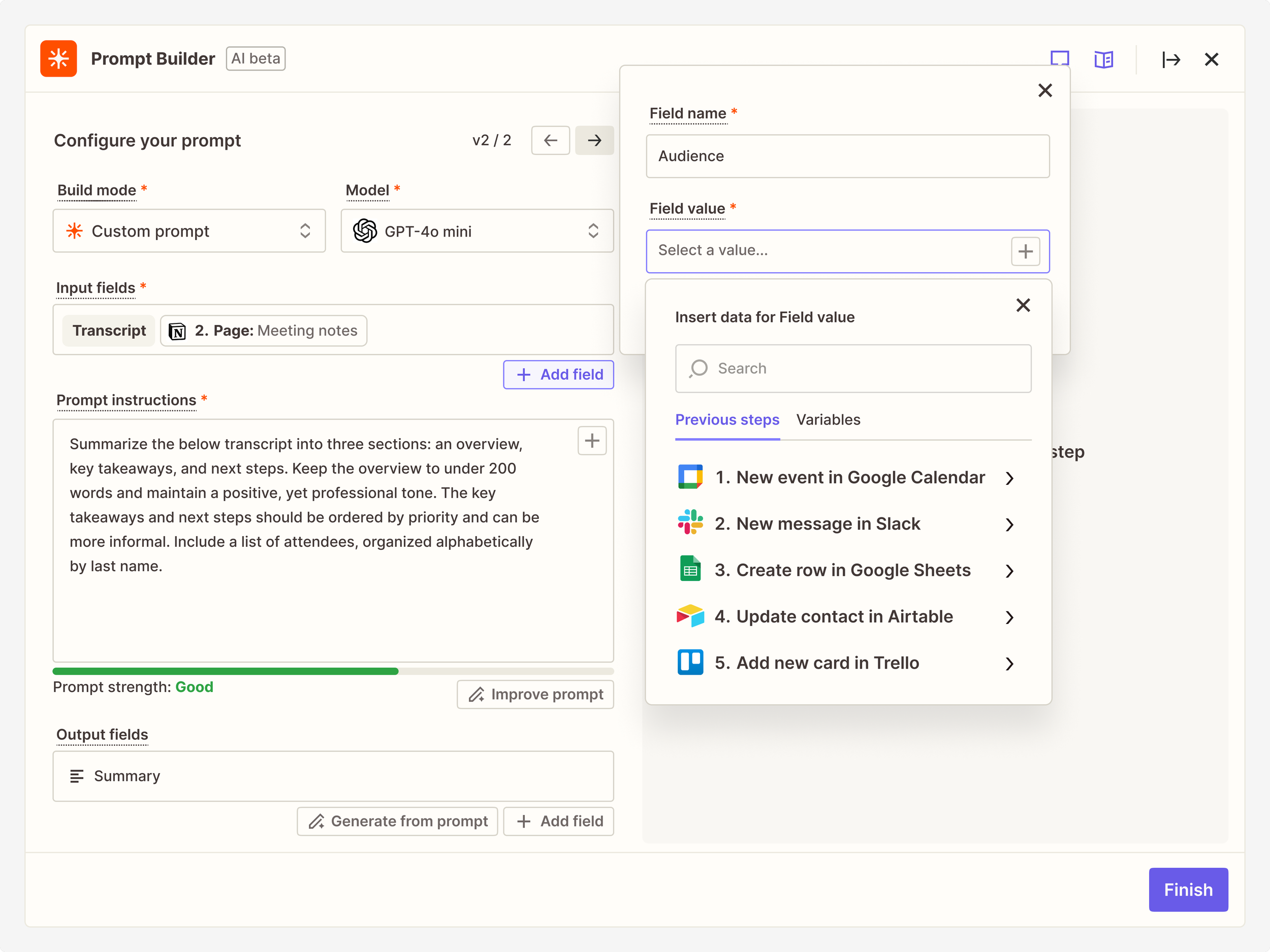

One notable post-launch change was moving input fields into their own section, similar to how I treated output fields. Users could now name an input field and map a dynamic data value to it. I learned that doing so helped the model better interpret the prompt instructions, which in turn yielded better results.

An updated view of the AI by Zapier interface with a separate section for input fields

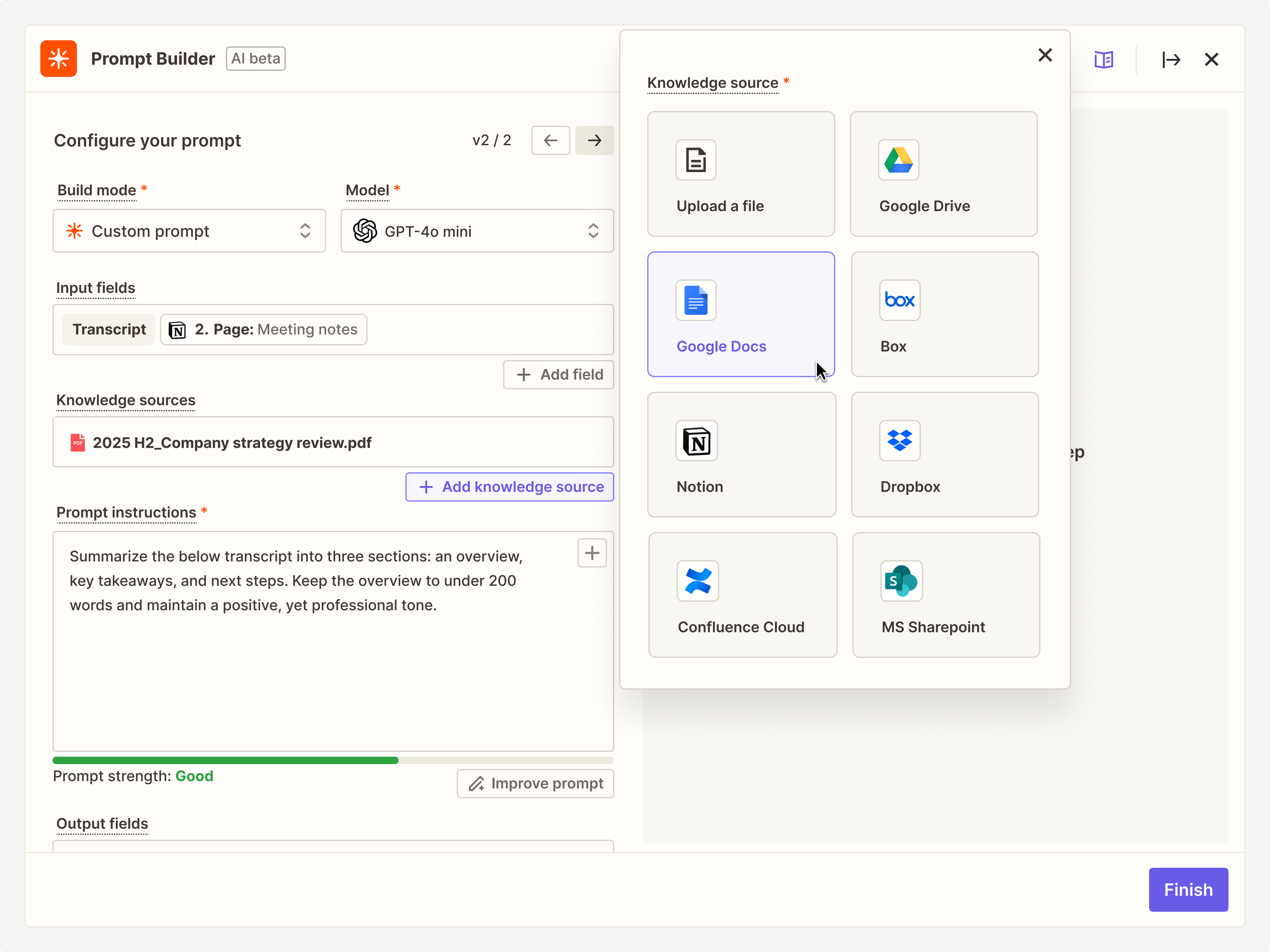

Subsequent projects further augmented and complemented AI by Zapier. For instance, I designed support for knowledge sources. Users could now upload a file or connect to certain third-party apps (including Google Drive, Notion, and Dropbox) to provide detailed context for their prompt instructions. I also thought through how these files would be managed, especially when reusing them across account types and permission levels.

An overlay menu showing the options available when adding a knowledge source to an AI by Zapier step

I also led an extensive initiative to design human-in-the-loop capabilities, driven by the need for guardrails around AI-generated content (including content from AI by Zapier). Human-in-the-loop provided a native way for users to pause a Zap and let a human intervene before the workflow continued. This proved especially valuable when AI outputs needed to be reviewed, edited, or approved.

A sample workflow diagram for how a user might add a human-in-the-loop step to review AI content generated from an AI by Zapier step